FOR508 - GIAC Certified Forensic Analyst

Date Earned: September 27, 2021

Proof: https://www.credly.com/badges/d3e6e8d6-cfe3-4cda-a235-dd8a8e2ace7e/public_url

Linky: https://www.sans.org/cyber-security-courses/advanced-incident-response-threat-hunting-training/

A pass is a pass

This post is a little different. I didn’t attend the SANS training for this before taking the exam. Reading through the syllabus on the course page, I guesstimated that my experience, plus some lab time with the specific tools I was unfamiliar with, would get me over the line. More on that below.

Before sitting this exam, my most pertinent experience was almost 3 years as an incident response consultant at Mandiant. In my time there, I spent a whole bunch of cycles examining individual hosts and threat hunting across entire client environments. I worked on and led incidents from initial triage through to remediation.

In addition to performing incident response, I was a lead instructor for both the Windows and Linux Enterprise Incident Response courses, and for the Network Traffic Analysis course. Being an instructor for these courses required learning every artefact and process to a greater degree of competence, because you never know which out-of-the-box question a student will ask. While “I’m not certain, but I’ll find out” is a valid response (as long as you do follow up), being able to answer the question on the spot is even better.

Self-education

My first step is to enumerate all the tools, techniques, and artefacts that are covered by the SANS course and therefore are within the scope of the exam. I start by visiting the FOR508 SANS page. Findings like the below tell me I should be working with Volatility, F-Response, Velociraptor and the Comae tools to gain functional knowledge, as I may be tested on them.

Hints, hints everywhere

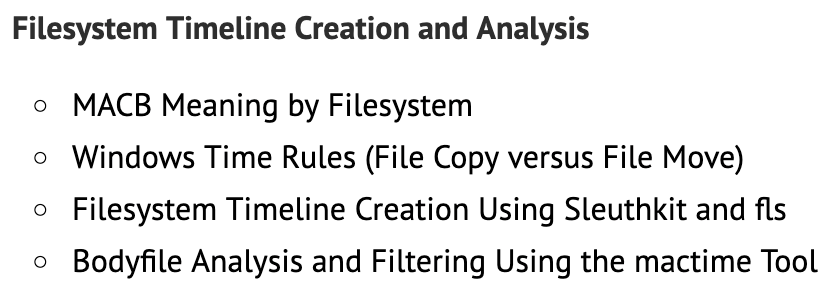

A little further down, I see that I need to learn about timestamps and how and when they get updated. I also note some additional tools I need to get good with.

Tools and artifacts! Score!
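Many Windows artefact timestamps, including the NTFS ones, are stored as 64-bit FILETIME values. A FILETIME is a count of 100-nanosecond intervals since 1601-01-01 00:00:00 UTC. Below is a minimal Python sketch of converting them in both directions. It assumes you already have the raw little-endian 8 bytes from an artefact. The helper names are my own and don't come from any forensic tool.

```python
from datetime import datetime, timedelta, timezone

# FILETIME epoch: 1601-01-01 00:00:00 UTC; one tick = 100 nanoseconds.
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)
TICKS_PER_SECOND = 10_000_000

# Helper names below are illustrative, not from any forensic tool.


def filetime_to_datetime(filetime: int) -> datetime:
    """Convert a raw FILETIME integer to a timezone-aware UTC datetime."""
    # datetime resolves to microseconds (10 ticks), so sub-microsecond ticks are dropped.
    return FILETIME_EPOCH + timedelta(microseconds=filetime // 10)


def datetime_to_filetime(dt: datetime) -> int:
    """Convert a timezone-aware datetime back to FILETIME ticks."""
    delta = dt - FILETIME_EPOCH  # raises TypeError for naive datetimes
    whole_seconds = delta.days * 86_400 + delta.seconds
    return whole_seconds * TICKS_PER_SECOND + delta.microseconds * 10


# Eight little-endian bytes as they would sit on disk (this value is the Unix epoch).
raw = bytes.fromhex("00803ed5deb19d01")
ticks = int.from_bytes(raw, "little")
print(ticks, filetime_to_datetime(ticks).isoformat())
# -> 116444736000000000 1970-01-01T00:00:00+00:00
```

Python's `datetime` only resolves to microseconds, so converting to `datetime` loses the last digit of FILETIME precision. Keep the raw integer if you need to compare values exactly.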

Now that I’ve noted these down, I’ll head out to the internet and do some open-source intelligence gathering (OSINT), looking for further clues. It would be immoral to obtain a pirated copy of the courseware (and a breach of SANS copyright which, if discovered, may lead to any future instructor applications being insta-denied - no bueno!). What we can do instead is look for indexes that other students have created and posted online. This helps flesh out our to-learn list with more nuance than the website provides.

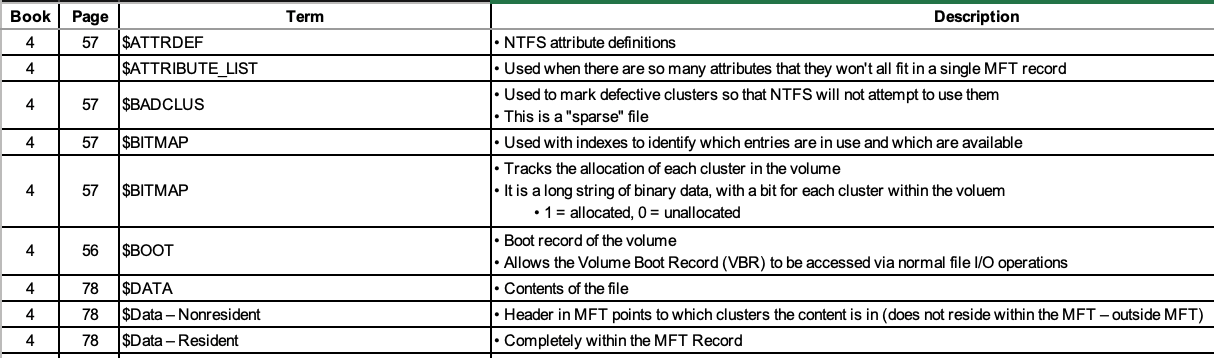

Looks like we get very deep into NTFS attributes

Now that we know what we need to know, it’s time to get learning. My plan was to learn the artefacts (read: theory) first, before deploying tools and getting deep into the practical. To that end, I picked up my handy dandy copy of Incident Response and Computer Forensics, 3e. For an in-depth review of this book, you can see this blog entry.

For the purposes of preparing for GCFA, it gave me a timely refresher on NTFS artefacts, the incident response process, the advantages of live response over dead disk forensics, etc. Everything a growing boy needs to pass this exam. As it’s a hard copy, I could also bring it into the exam with me as a reference, which was even better.

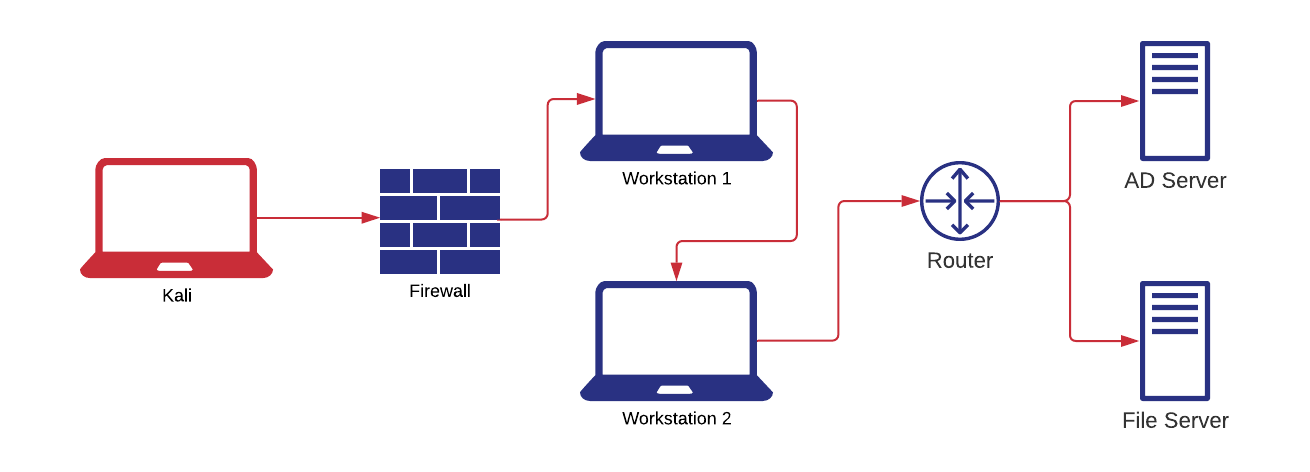
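One NTFS detail worth having down cold is that each MFT record carries two sets of MACB timestamps. One set lives in $STANDARD_INFORMATION ($SI) and another in each $FILE_NAME ($FN) attribute. $SI can be changed from user mode, which is what most timestomping tools do. $FN is generally only updated by the kernel, so comparing the two is a common timestomping heuristic.

Below is a minimal sketch that assumes the creation times have already been parsed out of the $MFT as raw FILETIME integers. The `MftTimes` type and the sample entries are hypothetical. Both checks are heuristics and can produce false positives, for example for software that deliberately sets file timestamps.

```python
from __future__ import annotations

from dataclasses import dataclass

TICKS_PER_SECOND = 10_000_000  # FILETIME ticks are 100 ns


@dataclass
class MftTimes:
    """Hypothetical record of one file's creation times, as raw FILETIME integers."""
    path: str
    si_created: int  # $STANDARD_INFORMATION creation time
    fn_created: int  # $FILE_NAME creation time


def timestomp_indicators(entry: MftTimes) -> list[str]:
    """Return the reasons (if any) this entry looks timestomped."""
    reasons = []
    # $SI is user-mode writable; $FN usually is not. A file "born" in $SI
    # before it was born in $FN suggests $SI was backdated.
    if entry.si_created < entry.fn_created:
        reasons.append("$SI creation earlier than $FN creation")
    # Some tools write whole-second values, leaving the 100 ns fraction at zero.
    if entry.si_created % TICKS_PER_SECOND == 0:
        reasons.append("$SI creation has no sub-second component")
    return reasons


# Hypothetical entries for illustration only.
stomped = MftTimes(r"C:\Users\Public\evil.exe",
                   si_created=130_000_000_000_000_000,
                   fn_created=132_000_000_000_001_234)
clean = MftTimes(r"C:\Users\Public\notes.txt",
                 si_created=132_000_000_000_001_234,
                 fn_created=132_000_000_000_001_234)

for entry in (stomped, clean):
    print(entry.path, timestomp_indicators(entry) or "no indicators")
```

Checking the raw tick value, rather than a converted `datetime`, matters for the second heuristic. A `datetime` would hide any sub-microsecond fraction.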

Time to get hands on! In this portion, I worked out which tools I’d use at each stage of the incident response lifecycle. I built a small home lab with a couple of Windows workstations, an AD server, a file server, and a Kali box. This let me leverage my GCIH experience to compromise the environment and generate artefacts aplenty.

Baby’s first compromise

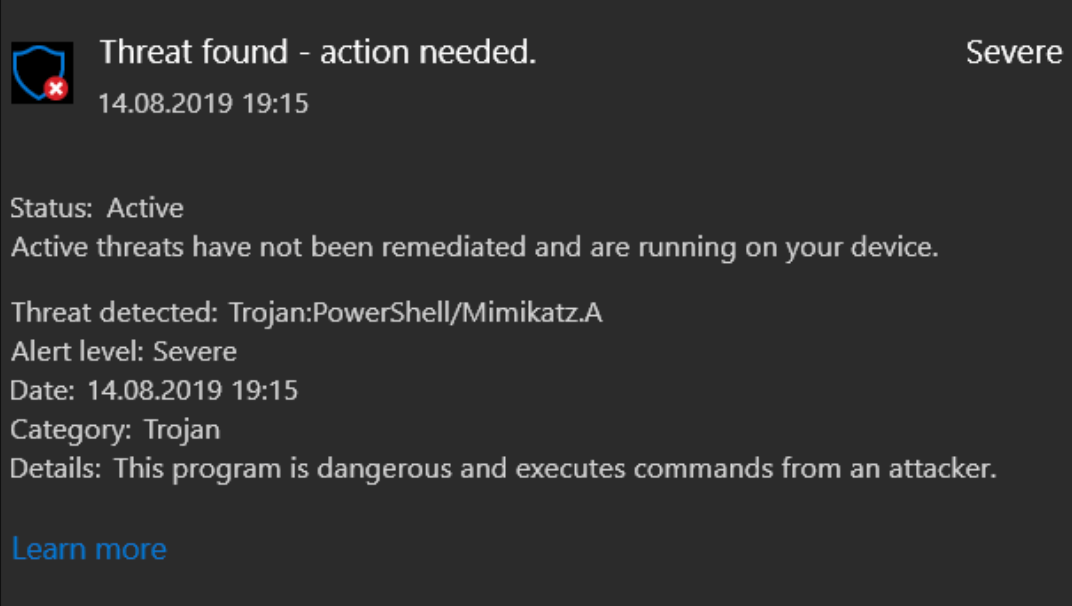

After getting in and thoroughly hosing the network, I realised that for IR purposes I needed something to start with: an initial lead, if you will. I performed more and more actions before getting frustrated and just downloading vanilla mimikatz onto Workstation 2. The resulting alert became my initial lead.

One of the few times this is considered success

I had a play with Kansa to collect artefacts off the hosts. This was pretty cool, but I think its value really shines when you deploy it at scale. Honestly, I didn’t spend much time on it beyond gaining some familiarity.

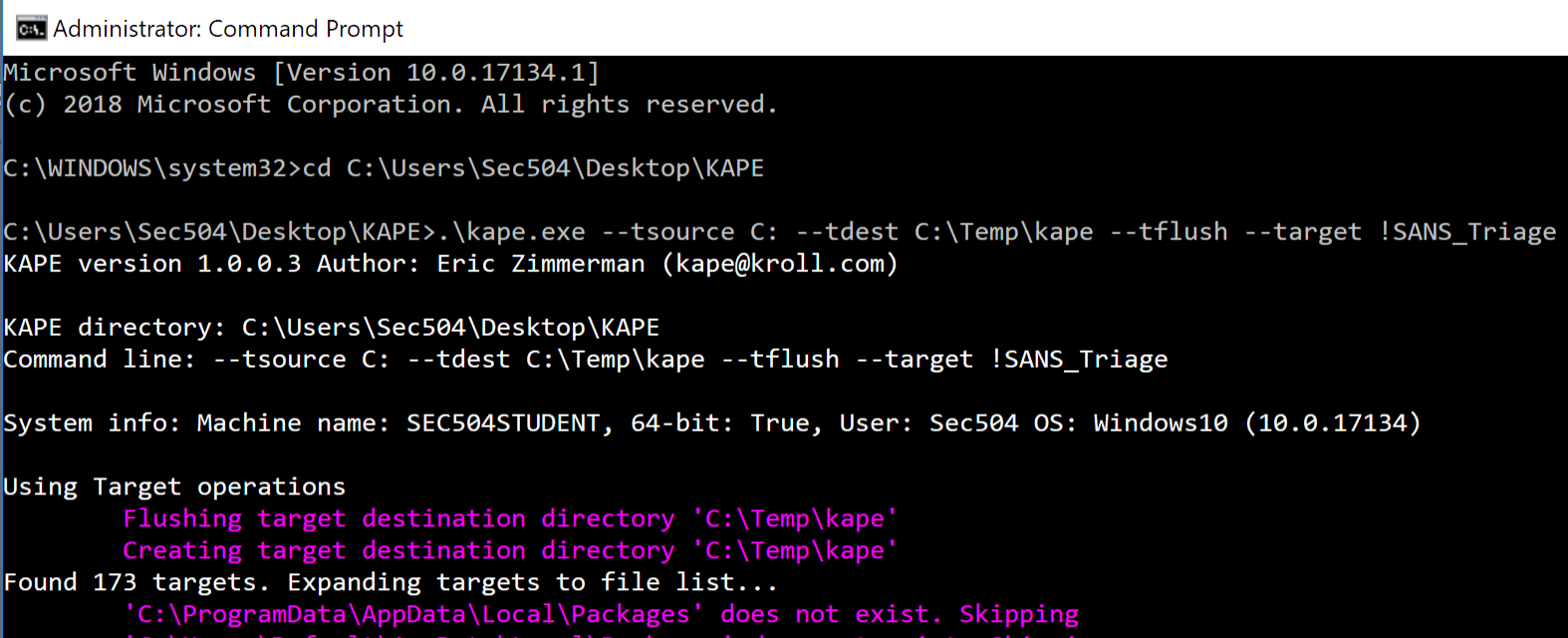

Instead, I cracked open KAPE, a fantastic tool that captures live response data from a target system, including things like the $MFT that require raw disk access. KAPE ships with a number of different pre-generated collection profiles to get you up and running super quick.

It just seemed appropriate to use the SANS Triage Package

After that, I used plaso to take our artefacts and build a nice big super timeline. For each host, I worked through the timeline and identified as many artefacts of my own activity as I could, noting how they tied together and which artefact provided which information. I used a bunch of artefact-specific tools here too, like PECmd to parse prefetch files. Heck, if Zimmerman wrote it, I probably used it in this phase (thank goodness for Eric Zimmerman).
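plaso does the heavy lifting here across many parsers, but the core idea of a super timeline is simple:

- Normalise every artefact's events to a common (UTC timestamp, source, description) shape.
- Sort them into one stream.
- Pivot around your initial lead.

Below is a minimal Python sketch of that idea. The `Event` type and all sample events are hypothetical, and this is not how plaso itself is implemented.

```python
from datetime import datetime, timedelta, timezone
from itertools import chain
from typing import Iterable, List, NamedTuple


class Event(NamedTuple):
    timestamp: datetime  # always normalised to UTC before merging
    source: str          # which artefact produced the event
    description: str


def super_timeline(*sources: Iterable[Event]) -> List[Event]:
    """Merge events from every artefact source into one chronological list."""
    return sorted(chain(*sources), key=lambda e: e.timestamp)


def pivot(timeline: Iterable[Event], lead: datetime, window: timedelta) -> List[Event]:
    """Keep only events within +/- window of the lead's timestamp."""
    return [e for e in timeline if abs(e.timestamp - lead) <= window]


UTC = timezone.utc
# Hypothetical events, loosely modelled on a lab mimikatz run.
mft = [Event(datetime(2021, 9, 1, 10, 3, tzinfo=UTC), "mft", "$FN created: mimikatz.exe")]
prefetch = [Event(datetime(2021, 9, 1, 10, 5, tzinfo=UTC), "prefetch", "MIMIKATZ.EXE executed")]
evtx = [Event(datetime(2021, 9, 1, 9, 0, tzinfo=UTC), "evtx", "4624 logon (type 3, network)")]
alerts = [Event(datetime(2021, 9, 1, 10, 6, tzinfo=UTC), "av", "mimikatz detected on Workstation 2")]

timeline = super_timeline(mft, prefetch, evtx, alerts)
lead = alerts[0].timestamp  # the alert is the initial lead
for e in pivot(timeline, lead, timedelta(minutes=5)):
    print(e.timestamp.isoformat(), e.source, e.description)
```

The whole approach depends on normalising timestamps to UTC before merging. Mixing local-time and UTC sources is a classic way to misorder a timeline.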

Having sunk a bunch of time into memory analysis in the past, I didn’t do much to refresh this skill set. That said, I did find two books by Andrea Fortuna very useful. The first was a GCFA sketchbook which, although I suspect it was written for the previous version of the training, was still a great help. Possibly more importantly, the second is basically a manual for Volatility, with man pages for a good chunk of the default modules. I used this one a lot in the exam.
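A staple memory-hunting check is comparing each process's parent against the normal Windows process tree, the kind of baseline the SANS DFIR posters document. Below is a minimal sketch that assumes you've already exported PID, PPID and name from a process listing such as Volatility's pslist. The `Proc` type, the deliberately small baseline, and the sample rows are all hypothetical.

```python
from typing import Dict, List, NamedTuple


class Proc(NamedTuple):
    pid: int
    ppid: int
    name: str


# Simplified (hypothetical, incomplete) baseline: child image -> usual parent.
EXPECTED_PARENT: Dict[str, str] = {
    "services.exe": "wininit.exe",
    "lsass.exe": "wininit.exe",
    "svchost.exe": "services.exe",
}


def unexpected_parents(procs: List[Proc]) -> List[str]:
    """Flag processes whose parent differs from the baseline."""
    by_pid = {p.pid: p for p in procs}
    findings = []
    for p in procs:
        expected = EXPECTED_PARENT.get(p.name.lower())
        if expected is None:
            continue  # not in our baseline, so no opinion
        parent = by_pid.get(p.ppid)
        # A missing parent may simply have exited; still worth a look.
        parent_name = parent.name.lower() if parent else "<not in listing>"
        if parent_name != expected:
            findings.append(f"{p.name} (PID {p.pid}) has parent {parent_name}, expected {expected}")
    return findings


# Hypothetical listing: the last svchost.exe was spawned by explorer.exe.
listing = [
    Proc(4, 0, "System"),
    Proc(500, 400, "wininit.exe"),
    Proc(620, 500, "services.exe"),
    Proc(640, 500, "lsass.exe"),
    Proc(800, 620, "svchost.exe"),
    Proc(3000, 2900, "explorer.exe"),
    Proc(3100, 3000, "svchost.exe"),
]

for finding in unexpected_parents(listing):
    print(finding)
```

A hit from this check is a lead to dig into, not a verdict. A real baseline would also cover process counts, image paths, and owning users.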

I sat the two practice tests partway through the above, mostly to find gaps early (so I didn’t need to redo work) and to make sure I was on the right track. They were valuable, but didn’t identify any additional tools or artefacts that needed to be worked in.

Finally, I created a “mini index” that was basically just a collection of SANS posters, plus man pages for the likes of Zimmerman’s tools, event log types and codes, SID breakdowns, timestamps, NTFS artefacts, etc.
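SID breakdowns earn their place in an index because the structure is regular: S-<revision>-<identifier authority>-<sub-authorities...>. Domain and local machine account SIDs take the form S-1-5-21-<three-part identifier>-<RID>. The final RID identifies the account: 500 is the built-in Administrator, and accounts created later start at 1000.

Below is a minimal Python sketch of that breakdown. The function name and the small lookup tables are my own and deliberately incomplete, and the example SIDs are made up.

```python
from typing import Any, Dict

# Deliberately small, hypothetical lookup tables; extend from your own index.
WELL_KNOWN_SIDS = {
    "S-1-5-18": "Local System",
    "S-1-5-19": "Local Service",
    "S-1-5-20": "Network Service",
}

WELL_KNOWN_RIDS = {
    500: "Administrator",
    501: "Guest",
    502: "krbtgt",
    512: "Domain Admins",
    513: "Domain Users",
    519: "Enterprise Admins",
}


def breakdown_sid(sid: str) -> Dict[str, Any]:
    """Split a SID string into its parts and label anything we recognise."""
    canonical = sid.upper()
    parts = canonical.split("-")
    if len(parts) < 3 or parts[0] != "S":
        raise ValueError(f"not a SID string: {sid!r}")
    # Assumes a decimal identifier authority (true for the common S-1-5 SIDs).
    subs = [int(p) for p in parts[3:]]
    info: Dict[str, Any] = {
        "revision": int(parts[1]),
        "authority": int(parts[2]),
        "sub_authorities": subs,
    }
    if canonical in WELL_KNOWN_SIDS:
        info["name"] = WELL_KNOWN_SIDS[canonical]
    # Domain / local machine accounts: S-1-5-21-<id1>-<id2>-<id3>-<RID>
    if info["authority"] == 5 and len(subs) == 5 and subs[0] == 21:
        rid = subs[4]
        info["domain_or_machine"] = "S-1-5-21-" + "-".join(str(s) for s in subs[1:4])
        info["rid"] = rid
        info["rid_meaning"] = WELL_KNOWN_RIDS.get(
            rid, "non-built-in account or group" if rid >= 1000 else "other built-in"
        )
    return info


# Hypothetical SIDs for illustration.
for s in ("S-1-5-18",
          "S-1-5-21-1111111111-2222222222-3333333333-500",
          "S-1-5-21-1111111111-2222222222-3333333333-1104"):
    print(s, breakdown_sid(s))
```

Splitting off the domain/machine portion is what lets you spot the same RID 500 across many hosts. If the prefixes differ, these are different local Administrator accounts, not one shared account.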

Certification

This was, unfortunately, the worst testing experience I’ve had to date. This was for a few reasons, only one of which was the fault of GIAC (as far as I know).

Some exam questions were a bit ambiguous. A few felt deliberately unclear or convoluted, testing my ability to comprehend English rather than to apply technical knowledge to a problem. I suspect this was done to make them artificially difficult.

I took the ProctorU test in the comfort of my own home. When I connected, the proctor didn’t speak to me at all, and provided all instructions over the LogMeIn chat system. While this was functional, there were no instructions on what to do if anything went wrong. Bet you can’t see where this is going…

Yep, about halfway through my exam, the proctor pinged me and advised he needed to restart some things because my webcam wasn’t visible. Whatever, my man, do what you need to. After about 10 minutes of poking around, I noticed there was no more interaction from the proctor. I alt-tabbed out to the LogMeIn chat and saw that the session had been disconnected. Cue the nervous sweats.

Webcam is still pointing at me, and my phone is on silent on the desk behind me. There’s a chance they’re calling me to get me to re-establish a connection. There’s also a chance they have an auto-reconnect procedure, and as soon as I get up to pick up my phone, they’re going to bounce me for cheating. Decision paralysis kicked in, and I just sat looking at my screen waiting (and straining my ears to hear the vibration of my phone ringing), for about 15 minutes.

After 15 minutes, I decided enough was enough. I switched tabs to the ProctorU sign-in page and refreshed. This allowed me to download a new LogMeIn package and start again (in hindsight, I could have just re-run the executable I downloaded to start the exam, but that didn’t occur to me at the time. Shines under pressure, that’s me.)

I was connected to a new proctor and explained the situation. After re-securing the room, I was allowed to continue my exam. Problem solved, right? … almost. Despite my prompting otherwise, the proctor insisted on resuming my exam in Chrome (I had sat the first half in Firefox). After I answered a single question, I was told I was locked out of my exam because (surprise surprise) the testing engine detected I was using a different browser. In future I’ll be un-pinning Chrome (my personal browser) from the taskbar, as this seems to cause proctors no end of confusion.

Once I reached out to the proctor, this was quickly rectified, and I was able to finish my exam in relative peace. And that, friends, is how I self-studied and passed the GCFA exam. If you plan to walk this path yourself, good luck! If you have any questions, you can reach me via the social buttons on the left.